@article{jiang2023cinematic,

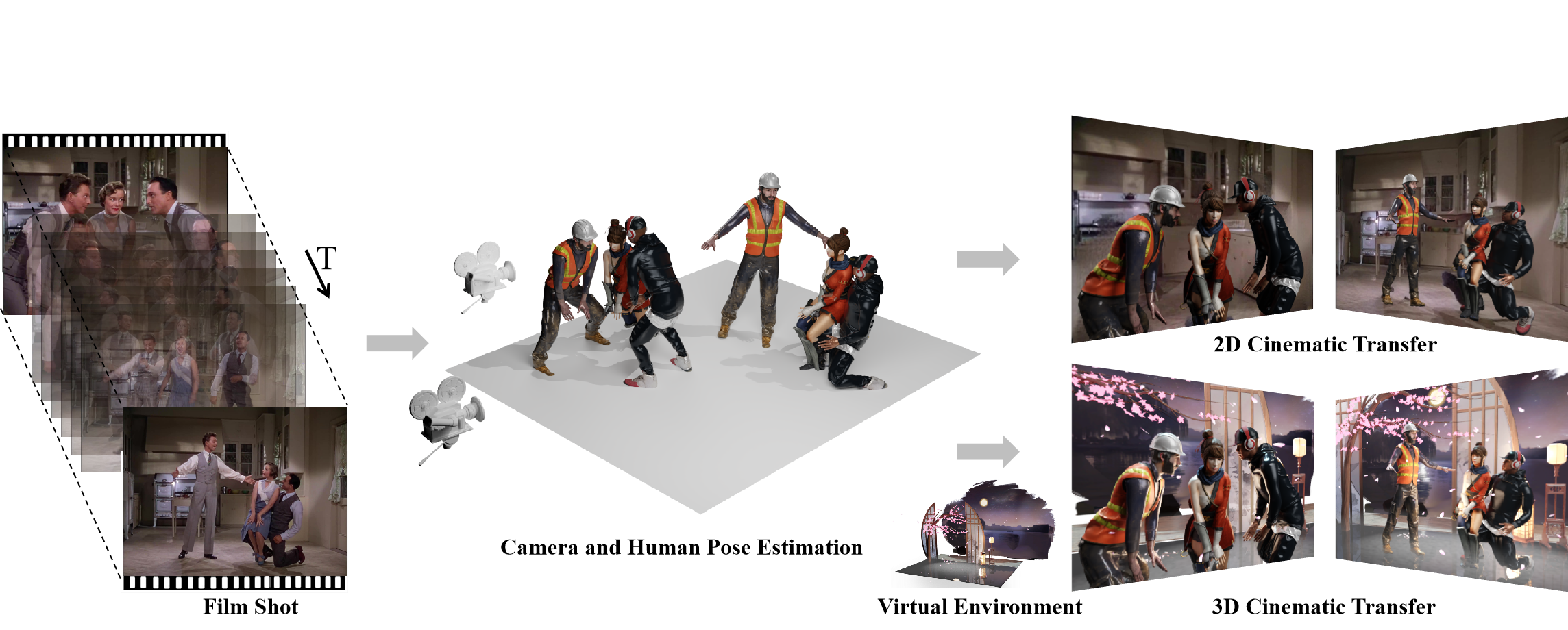

title={Cinematic Behavior Transfer via NeRF-based Differentiable Filming},

author={Jiang, Xuekun and Rao, Anyi and Wang, Jingbo and Lin, Dahua and Dai, Bo},

journal={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2024}

}