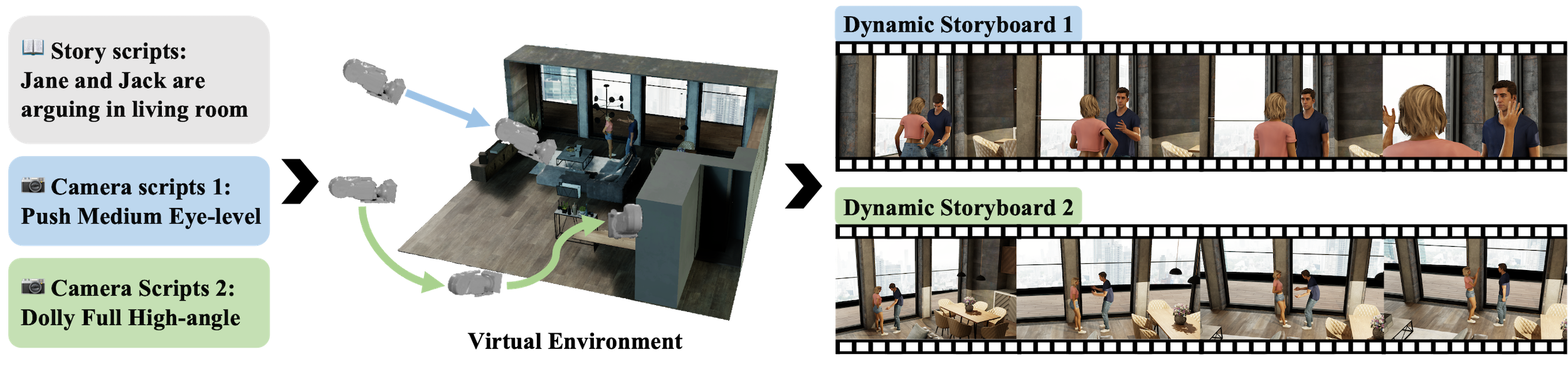

Virtual Dynamic Storyboard operates in a “propose-simulate-discriminate” mode: given a formatted story script and a camera script as input, it generates several character animation and camera movement proposals that follow predefined story and cinematic rules⚡️.
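The three-stage loop can be sketched as a toy Python pipeline. This is a minimal illustration under stated assumptions, not the project's implementation. The `Proposal` type, the rule predicates, the `simulate` stand-in (a real system would render each proposal in a 3D engine), and the scoring function used as the discriminator are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Proposal:
    animation: str  # hypothetical: name of a character animation clip
    camera: str     # hypothetical: name of a camera movement


Rule = Callable[[Proposal], bool]   # hypothetical story/cinematic rule
Score = Callable[[Proposal], float]  # hypothetical discriminator score


def propose(animations: list[str], cameras: list[str],
            rules: list[Rule], n: int, seed: int = 0) -> list[Proposal]:
    """Propose: sample up to n distinct proposals that satisfy every rule."""
    rng = random.Random(seed)
    candidates = [Proposal(a, c) for a in animations for c in cameras]
    valid = [p for p in candidates if all(rule(p) for rule in rules)]
    rng.shuffle(valid)
    return valid[:n]


def simulate(p: Proposal) -> dict:
    """Simulate: placeholder for rendering the proposal in an engine."""
    return {"proposal": p, "clip": f"{p.animation}+{p.camera}"}


def discriminate(sim: dict, score: Score) -> float:
    """Discriminate: rate one simulated result (higher is better)."""
    return score(sim["proposal"])


def storyboard(animations: list[str], cameras: list[str], rules: list[Rule],
               score: Score, n: int = 4) -> Optional[Proposal]:
    """Run propose -> simulate -> discriminate and keep the best proposal."""
    proposals = propose(animations, cameras, rules, n)
    if not proposals:
        return None
    sims = [simulate(p) for p in proposals]
    best = max(sims, key=lambda s: discriminate(s, score))
    return best["proposal"]


if __name__ == "__main__":
    anims = ["walk", "run", "sit"]
    cams = ["static", "pan", "dolly_in"]
    # Hypothetical rule: never pair a run with a dolly-in shot.
    rules = [lambda p: not (p.animation == "run" and p.camera == "dolly_in")]
    w_a = {"walk": 1, "run": 2, "sit": 0}
    w_c = {"static": 0, "pan": 1, "dolly_in": 3}
    print(storyboard(anims, cams, rules, lambda p: w_a[p.animation] + w_c[p.camera], n=9))
    # prints Proposal(animation='walk', camera='dolly_in')
```

Keeping rule checking in the proposal stage means the expensive simulation step only runs on candidates that already satisfy the story and cinematic constraints; the discriminator then only has to rank valid options.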